Dimitar Mišev

2025 Recap

In this post I look back on 2025: from diving into the mechanics of AI and daily running routines fueled by a newfound love for coffee, to some of the joys and logistics of raising a curious six-year-old. It was a year of interesting challenges and a few new passions.

Running

I have attempted running several times in the past, repeating a familiar cycle every few years: start enthusiastically with 2-3 km at a time, then quickly end up with knee pain once I push past 5-6 km. My biggest success was a 10k race in ~55 min in 2014, which I barely finished because of crippling knee pain.

In the summer of 2020 it got serious. The first run ended in sharp knee pain after ~700 meters, but I limped on at a fast walk for 10 more km. I stubbornly pushed through 5-6 more months of run-walking through seemingly never-ending knee pain, until the pain eventually dissipated. I slowly accumulated experience through 2021, and in April 2022 I even ran a relatively successful marathon in 3:32 (in plain, old-tech Kinvara 12 shoes). It was going well. But then my kid started sharing pathogens from kindergarten a bit too enthusiastically, and after a few months of regular sickness I gave up on running.

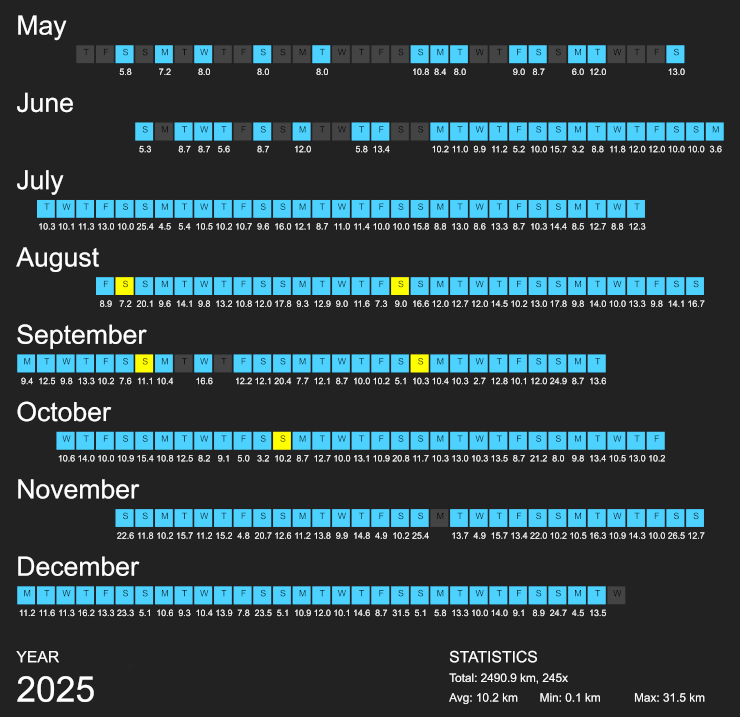

This year the virus situation finally became more reasonable and manageable, so I decided to try again. I started slowly around May, and from mid-June on settled into a rhythm of running some amount every single day.

Running daily has been really, really good; there are so many benefits that they deserve a separate post. After some experimentation I have settled on a regular, straightforward schedule that boils down to roughly an hour of easy running for every hour of fast running.

A typical week looks like this:

- Monday: an hour of slow, easy running in heart rate zone 1.

- Tuesday: an hour of faster running, structured as 1-3 km intervals with 1-2 min of slow recovery jogging in between (plus 10 min of warmup and cooldown). This is done at around the border of zones 3 and 4, fast enough to be fun and exhilarating while still feeling comfortable.

- Wednesday to Friday: the slow/fast cycle repeats.

- Saturday: a longer run of two hours (or more when training for a marathon), at around the border of zones 2 and 3.

- Sunday: an hour of slow recovery running.

My paces are 5:50-6:10 min/km for the easy and recovery runs, 4:30-4:40 min/km for the intervals (4:50-5:00 on average over the whole workout), and 4:50-5:00 min/km for the long run of 24-32 km. Starting from zero, when I can barely run a few km, I need about three months to reach this fitness level. It sits right around the upper bound of what my body can handle: pushing much beyond it eventually leads to some kind of injury or illness.
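To make the “an hour of easy for every hour of fast” balance concrete, here is a small sketch that tallies one week of the schedule described above. It assumes Monday, Wednesday, Friday and Sunday are easy one-hour days, Tuesday and Thursday are one-hour interval days, and Saturday is a two-hour long run (my reading of “the slow/fast cycle repeats”), and it uses the midpoints of the pace ranges given above. The names `Run`, `parse_pace` and `summarize` are purely illustrative.

```python
from dataclasses import dataclass


@dataclass
class Run:
    day: str
    kind: str               # "easy" or "fast"; the long run counts as fast
    minutes: float
    pace_min_per_km: float  # average pace over the whole session


def parse_pace(pace: str) -> float:
    """Convert an 'm:ss' pace string into decimal minutes per km."""
    minutes, seconds = pace.split(":")
    return int(minutes) + int(seconds) / 60


# Assumed week: easy Mon/Wed/Fri/Sun, intervals Tue/Thu, long run Sat.
# Paces are the midpoints of the ranges in the text (6:00 easy, 4:55 otherwise).
WEEK = [
    Run("Mon", "easy", 60, parse_pace("6:00")),
    Run("Tue", "fast", 60, parse_pace("4:55")),
    Run("Wed", "easy", 60, parse_pace("6:00")),
    Run("Thu", "fast", 60, parse_pace("4:55")),
    Run("Fri", "easy", 60, parse_pace("6:00")),
    Run("Sat", "fast", 120, parse_pace("4:55")),
    Run("Sun", "easy", 60, parse_pace("6:00")),
]


def summarize(week):
    """Return hours per kind of run and the total distance in km."""
    hours = {"easy": 0.0, "fast": 0.0}
    km = 0.0
    for run in week:
        hours[run.kind] += run.minutes / 60
        km += run.minutes / run.pace_min_per_km  # distance = time / pace
    return hours, km


hours, km = summarize(WEEK)
print(hours, round(km, 1))
```

Under these assumptions the week comes out to four easy hours and four fast hours, about 89 km in total, and the two-hour long run at 4:55 min/km covers roughly 24 km, the lower end of the 24-32 km range.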

This is quite similar to the “Norwegian Singles” approach, the main difference being the fast long run at a steady pace on Saturday instead of another interval session. So far this schedule seems to make for sustainable long-term training, while also being easy to adapt to races or to increase / decrease the training load as needed. The high-frequency, fixed structure lets you build an accurate intuition for your running capacity on any given day, which is extremely valuable for preventing overtraining and injuries.

I set some new personal bests: 5k in 20:29 (down from 21:15) and 10k in 42:06 (down from 43:10). More importantly, I’ve tremendously enjoyed it and am so grateful that I’m able to just go out whenever I want and run for one or two hours. It’s precious to spend some time in nature every day, to be intimately aware of changes in the weather and the seasons, to regularly cross paths with wildlife, and to see beautiful sunrises and sunsets. I took the picture below during a run along my favorite route, and at the end of this post is the same view at a different time and season.

AI

I was vaguely aware of GPT-2 and then GPT-3 when they were released. But it sounded like a scientific development in a field distant from mine (a specialized niche in databases), so I didn’t pay much attention. Then ChatGPT came out.

In 2023 and 2024 I mostly watched from the sidelines. The chatbots did not offer much beyond entertainment. In 2025 things finally progressed into something actually useful in practice. Perhaps DeepSeek kicked everyone into high gear with the open-weights releases of V3 and R1.

At some point towards the end of 2024 it started to bother me that I didn’t really understand much about something everyone seemed to be talking about. So, as a kind of New Year’s resolution for 2025, I set a goal to read up on LLMs and try to understand how they actually work under the hood. It’s a fascinating field, once you get past all the jargon and the many tools and frameworks.

I even got an NVIDIA RTX 3090 with 24GB of VRAM in December 2024 to replace the small 8GB RTX 3050 I had. There is no better way to make concepts stick than to actually try to run things. The GPU, however, turned out to be far from trivial to set up, and a huge time sink.

First, it needed a more powerful power supply, and replacing a power supply is never fun.

Second, it needed water cooling. I bought it used on eBay, and it came with a backplate for water cooling instead of regular plug-and-play fans. It took me a while to figure out that I needed to buy a suitable pump and fan to connect to it. I got just enough help on the Alphacool forum to piece together an order for the correct parts. Assembling everything was even more nerve-wracking; it is surprisingly hard to find information on this topic. After a few days of guessing, reading and watching videos, I finally had things in order. As a result, I now know roughly how to deal with water cooling, connect everything to the motherboard, and tune the fans on Linux. And the machine is absolutely cool and quiet.

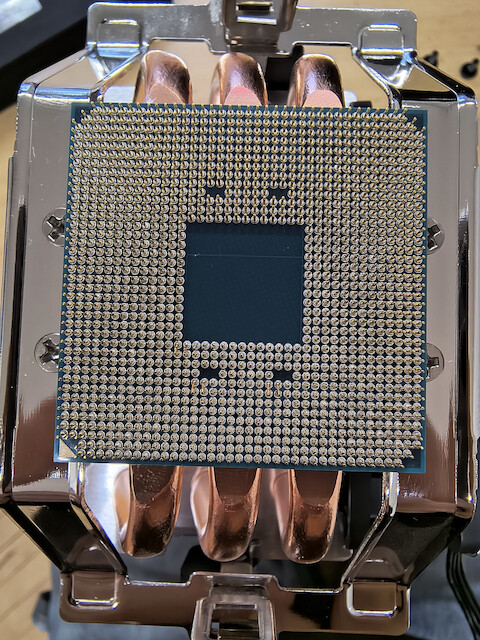

Third, the GPU is really heavy and lacked a metal plate to screw it to the case. I hacked together some flimsy support, but a few months later it turned out not to be good enough. The machine would not boot reliably, and after a day of trying various driver/kernel version combinations I eventually realized that the PCIe slot itself was damaged. The second PCIe slot on my motherboard was not a good option, because it has lower bandwidth and the water pump/fan no longer fit properly. So I bought a new motherboard to replace the old one. Even less fun than replacing a power supply.

New motherboard in, and then... the machine would not boot, with zero signs of life. Everything had to come out again one by one, until I uncovered a dozen bent pins on the CPU. I did my best to straighten them with a screwdriver. Miraculously, it worked, and the machine booted just fine in the end. Lesson learned: I placed the PC horizontally with a prominent DO NOT TOUCH note.

I have been running various LLMs on this GPU, updating them as new, better ones are released. Models of up to 32B parameters run very well, as long as the quantized weights together with the context fit into the GPU’s VRAM. Qwen3-Coder-30B-A3B at Q4_K_XL quantization and Mistral-Small-3.2-24B-Instruct-2506 at Q6_K_XL run extremely fast with reasonable context lengths of 55,000 and 12,000 tokens, respectively. Along with the dense Qwen3 32B, these are the three models I currently play with. At the start I used llama.cpp to run models, but nowadays I use Ollama, with Open WebUI as the chat interface, which hides a lot of the details and makes it easy to switch between models.
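A rough way to check whether a model fits in 24 GB is to add the size of the quantized weights to the size of the KV cache for the chosen context. The sketch below does that arithmetic. The bits-per-weight figures and the architecture numbers (layers, KV heads, head dimension) are assumptions for illustration, not values taken from the model cards, so check the actual model card and GGUF file size before relying on them. It also ignores runtime overhead such as compute buffers, which comes on top.

```python
GIB = 1024 ** 3


def weights_bytes(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in bytes.

    For a mixture-of-experts model all experts must be resident,
    so use the total (not the active) parameter count.
    """
    return params_billion * 1e9 * bits_per_weight / 8


def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   context_tokens: int, bytes_per_value: int = 2) -> float:
    """Keys and values (hence the factor 2) for every layer and token; fp16 by default."""
    return 2 * n_layers * n_kv_heads * head_dim * context_tokens * bytes_per_value


def estimate_vram_gib(params_billion, bits_per_weight, n_layers, n_kv_heads,
                      head_dim, context_tokens, bytes_per_value=2):
    """Weights plus KV cache, in GiB (runtime overhead not included)."""
    total = weights_bytes(params_billion, bits_per_weight) + kv_cache_bytes(
        n_layers, n_kv_heads, head_dim, context_tokens, bytes_per_value)
    return total / GIB


# Assumed figures (hypothetical, not verified): ~30.5B total parameters,
# ~4.5 bits/weight for a Q4-class quant, 48 layers, 4 KV heads, head_dim 128.
qwen_moe = estimate_vram_gib(30.5, 4.5, 48, 4, 128, 55_000)

# Assumed figures (hypothetical, not verified): ~24B parameters,
# ~6.5 bits/weight for a Q6-class quant, 40 layers, 8 KV heads, head_dim 128.
mistral = estimate_vram_gib(24, 6.5, 40, 8, 128, 12_000)

for name, gib in [("Qwen3-Coder-30B-A3B", qwen_moe), ("Mistral-Small-3.2-24B", mistral)]:
    print(f"{name}: ~{gib:.1f} GiB of a 24 GB card")
```

Under these assumed figures both configurations land at roughly 20-21 GiB, leaving a few GiB for runtime overhead. The KV cache term grows linearly with context, so the context length is the main knob to turn when a model does not quite fit.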

My local models lag quite far behind the much larger state-of-the-art ones in capability. The main advantage is that whatever prompts I run stay private. One could get a larger GPU, or stuff in multiple GPUs, in order to run a bigger model locally. It’s actually quite common to go down a deep rabbit hole in the quest to run ever larger models; just take a peek at r/LocalLLaMA. However, the cost does not scale well at all for private use. It would be like buying a bus, or a plane, to transport just one person. One GPU is fine cost-wise; millions of gamers have one, after all.

Besides LLMs, I have also used the GPU a bit at work to run various remote-sensing foundation models directly with PyTorch. The smaller ones run fine on just a CPU, but the larger ones that also combine some kind of query answering or caption generation usually work only with CUDA and hence require a GPU.

Parenting

My son started school this year, a few months before turning six. He already had some fundamental skills under his belt:

- Reading fluently, and writing reasonably well. English spelling is just hard: there aren’t many rules, and it takes a lot of reading to build some intuition.

- Basic arithmetic, along with a few more advanced concepts like even/odd, prime numbers, and square roots. Numberblocks is an incredible show for teaching all this; I can’t recommend it enough.

- Basic knowledge about geography, like continents and various countries, quite a lot about astronomy, and ecology / biology. We’ve watched too many documentaries, and a few Octonauts :-)

Academically, I believe he could easily have started in 2nd, or even 3rd grade. We didn’t think he was ready for that age-wise, though, and did not push for it. His school is actually a little unusual in that classes are mixed-grade: 1st and 3rd graders sit together in one class, and likewise 2nd and 4th graders. I honestly don’t fully understand how this works in practice; probably not too well, as the school is already rolling back the program. But he seems to have fit in well with the older kids in the class, judging by all the nonsense he picks up about phones, TikTok memes, and various games. Every now and then we hear hilarious phrases exclaimed extremely seriously, like “ey mama digga, that’s not how you do it!” :D

I enjoy teaching him and making him aware of a wide range of subjects. But beyond the minimum basics of reading/writing and arithmetic, I avoid venturing too deeply into specific facts and algorithms (e.g. manually multiplying large numbers) that they will cover in school anyway. In the age of computers, from calculators to AI, it is not worth spending too much time and energy on memorizing details and doing things by hand (the equivalent of tactics in chess). We will not beat the machines at that. Far more important is fostering curiosity and research skills, critical thinking, general problem-solving intuition, strategic big-picture planning, and a creative mindset.

Two fantastic books on this topic that I can recommend as a starting point: “Math from Three to Seven: The Story of a Mathematical Circle for Preschoolers” by Alexander Zvonkin, and “Range: How Generalists Triumph in a Specialized World” by David Epstein.

At some point I set up a small desk for him with a mini-PC running Kubuntu 24.04, basically a smaller version of my own setup. He’s already proficient with the keyboard and mouse, and is slowly learning the ropes of basic stuff like copy-paste, the filesystem, the terminal, programming with a bit of Python, etc. Besides reading and writing, I consider fast touch typing a pretty fundamental skill nowadays. It’s been difficult to teach, though, as it’s hard to find a tool interesting enough for a child. His hands are also much smaller, which certainly makes it harder. For now, I just occasionally remind him to try to use both hands when typing, and maybe keep them on the home row.

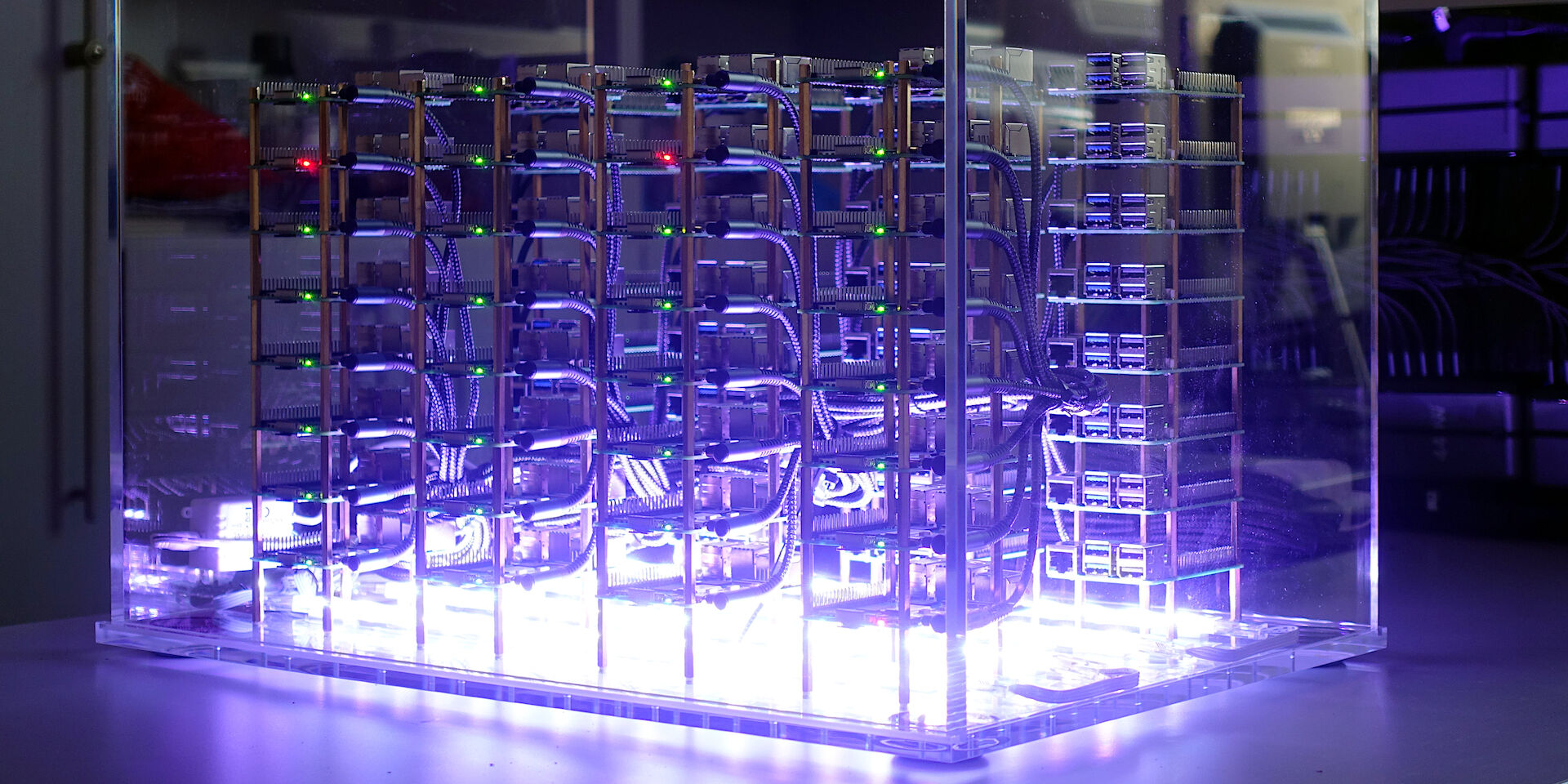

Work

The most interesting part of work this year was building a cluster of 64 Raspberry Pi 5s. It’s a peer-to-peer federation of rasdaman database nodes, which allows us to perform various realistic experiments on query distribution in a constrained and unreliable environment. This was a fun detour from the kind of work we usually do, and I thought it was worth mentioning here. We showed it at the Bremen Space Tech Expo in November, and there was a lot of interest and positive feedback.

Credit for the hardware design and assembly goes to my boss, Prof. Dr. Peter Baumann. I worked on setting up the software and optimizing the network setup to minimize latency, which is pretty bad over Wi-Fi 5. By far the biggest challenge is powering 64 little computers. There are compact multi-port USB-C power supplies that should do the job in theory, based on their specs, but the ones we got just don’t work that well in practice. A random 10-20% of the nodes eventually die due to undervoltage problems (the red LEDs in the picture below).
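On Raspberry Pi OS, the firmware reports under-voltage and throttling state through `vcgencmd get_throttled`, which prints a hex bitmask such as `throttled=0x50005`. A minimal sketch for decoding that output, e.g. to collect it from every node and spot the ones that have browned out, might look like the following. The bit meanings follow the Raspberry Pi documentation; the function names and the idea of polling all nodes this way are my own hypothetical illustration, not part of our actual setup.

```python
import re
from typing import List

# Bit positions documented for `vcgencmd get_throttled` on Raspberry Pi.
THROTTLED_BITS = {
    0: "under-voltage detected",
    1: "arm frequency capped",
    2: "currently throttled",
    3: "soft temperature limit active",
    16: "under-voltage has occurred",
    17: "arm frequency capping has occurred",
    18: "throttling has occurred",
    19: "soft temperature limit has occurred",
}


def decode_throttled(output: str) -> List[str]:
    """Turn e.g. 'throttled=0x50005' into the list of set flags, lowest bit first."""
    match = re.search(r"throttled=(0x[0-9a-fA-F]+)", output)
    if match is None:
        raise ValueError(f"unexpected vcgencmd output: {output!r}")
    mask = int(match.group(1), 16)
    return [desc for bit, desc in sorted(THROTTLED_BITS.items()) if mask & (1 << bit)]


def has_undervoltage(output: str) -> bool:
    """True if the node is under-voltage now or has been since the last boot."""
    return any("under-voltage" in flag for flag in decode_throttled(output))


if __name__ == "__main__":
    # Example string; on a Pi this would be the captured output of `vcgencmd get_throttled`.
    sample = "throttled=0x50005"
    print(decode_throttled(sample))
    print(has_undervoltage(sample))
```

On a node, the input string could come from `subprocess.run(["vcgencmd", "get_throttled"], capture_output=True, text=True).stdout`. The “has occurred” bits stay set until the next reboot, so they also catch brief dips that have already recovered.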

Coffee

This year I finally got into drinking coffee. Really late to the party :-) I’ve tried it a few times in the past, but the taste never impressed me, and neither did the digestive irritation that usually followed.

In the summer I traveled to the Calabria region in Italy for a project meeting. It occurred to me to try coffee; the thinking went: Italy is famous for good coffee and I’m here, so why not. The meeting was in the hills at a gorgeous old olive farm, very cozy and informal. Coffee was prepared in several moka pots of various sizes, and I took note.

Before leaving I picked up a local Calabrian brand of coffee, and back home I got the classic Bialetti Moka Express. At first, what excited me even more than drinking the coffee was the prospect of optimizing the brewing, hehe. Eventually I got a manual coffee grinder from AliExpress (TIMEMORE C3ESP PRO) for 30€ that works fabulously. I really enjoy the whole manual process and don’t think I’d ever get any kind of automated machine.

That said, I’m not a heavy coffee drinker at all. I have at most one double espresso in the morning, and only before faster running workouts, so about three times a week. Combined with the right music, this puts me in an insanely good mood for running. The rush of dopamine (or whatever it is) right after the coffee is just bonkers; it pretty much always puts a huge smile on my face or gets me singing out loud during the run.

Car

Also this year I finally got a car. I had resisted getting one before, because:

- It’s not necessary, we can get anywhere by bike or public transport just fine.

- Modern cars are ridiculously complex and I dislike owning stuff I don’t understand.

- Climate change and all that.

- Maintenance and repairs are a pain, especially with older cars.

- Expensive compared to the alternatives.

- It silently leads to a more sedentary life.

- Waiting in traffic is painful, and driving all in all is a lot more stressful than sitting in the tram / train.

Some reasons that led me to change my mind this year:

- We are now three people, so the car is almost full on most trips. At six, my son has to pay for public transport as well, and the cost for three gets pretty close to the cost of the car.

- It does save a lot of time; trips are usually about 2x faster.

- I found an excellent deal for a brand new full hybrid model.